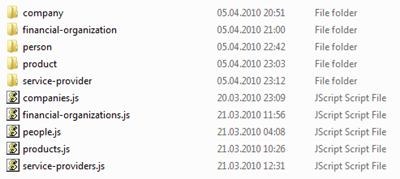

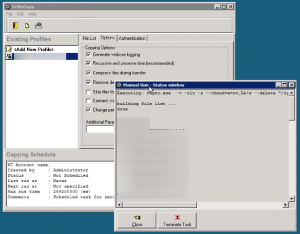

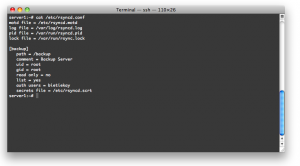

It’s about time to import some data into our previously established object scheme. If you want to follow along yourself, first run the Crunchbase mirroring tool to create your own mirror on your hard disk.

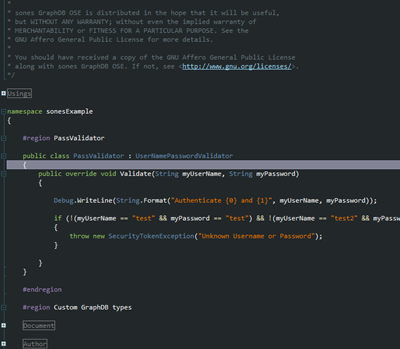

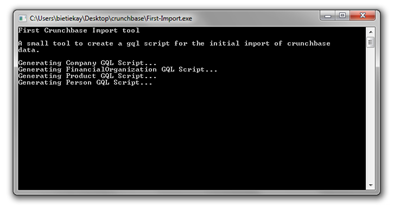

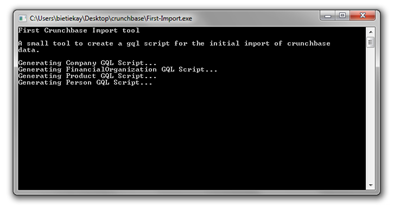

In the next step another small tool needs to be written: one that creates nice, clean GQL import scripts for our data. Since every data source is different there’s not really a way around this step – in the end you’ll need to extract data there and import it here. One possible alternative would be to implement a dedicated importer for the GraphDB – but I’ll leave that for another article series. Back to our tool: it’s called “First-Import” and its only purpose is to create a first small graph out of the mirrored Crunchbase data and fill in the mainly primitive data attributes. Download this tool here.

This is why in this first step we mainly focus on the following object types:

- Company

- FinancialOrganization

- Person

- Product

- ServiceProvider

Additionally, all edges pointing to a Company object, including the competition edges, will be imported in this part of the article series.

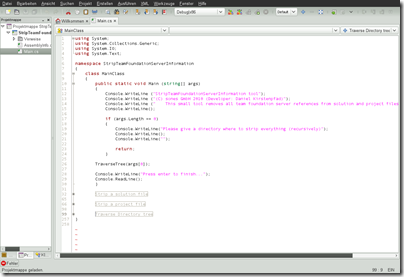

So what does the first-import tool do? Simple:

- it deserializes the JSON data into a usable object – in this case the tool is written in C# and uses .NET’s own JavaScript deserializer

- it then maps all attributes of that deserialized JSON object to attribute names in our graph data object scheme and it does so by outputting a simple query

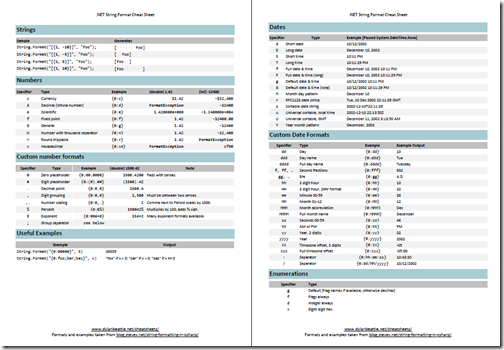

- Simple attribute types like String and Integer are simply assigned using the “=” operator in the Graph Query Language

- 1:1 references are assigned by assigning a REF(…) to the attribute – for example: INSERT INTO Product VALUES (Company = REF(Permalink='companyname'))

- 1:n references are assigned by assigning a SETOF(…) to the attribute. Because we are not using a bulk import interface but the standard GQL REST interface, the object(s) we’re going to reference must already exist – therefore we chose to do this 1:n linking in a separate UPDATE step after creating the objects themselves. Knowing this, the UPDATE looks like this: UPDATE Company SET (ADD TO Competitions SETOF(Permalink='…',Permalink='…')) WHERE Permalink = 'companyname'
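The mapping rules above can be sketched in a few lines. This is not the first-import tool itself (that one is written in C#); it is a minimal Python illustration of the same idea. The JSON keys, the attribute names other than Permalink, the comma-separated assignment list and the quote-escaping rule are assumptions made for this example.

```python
import json

# Hypothetical mapping from Crunchbase JSON keys to attribute names of the
# Company type. Only "Permalink" appears in the article; the rest is assumed.
COMPANY_ATTRIBUTES = {
    "permalink": "Permalink",
    "name": "Name",
    "founded_year": "FoundedYear",
}


def gql_literal(value):
    """Render an Integer or String value as a GQL literal (escaping is assumed)."""
    if type(value) is int:
        return str(value)
    return "'" + str(value).replace("'", "\\'") + "'"


def build_insert(type_name, record, attribute_map):
    """Create one INSERT that assigns all primitive attributes with '='."""
    assignments = []
    for json_key, attribute in attribute_map.items():
        value = record.get(json_key)
        if value is None:
            continue  # the mirrored JSON has no value for this attribute
        assignments.append(f"{attribute} = {gql_literal(value)}")
    return f"INSERT INTO {type_name} VALUES ({', '.join(assignments)})"


def company_ref(permalink):
    """1:1 reference to a Company, identified by its Permalink."""
    return f"REF(Permalink={gql_literal(permalink)})"


def build_competition_update(permalink, competitor_permalinks):
    """1:n references are added in a separate UPDATE once all objects exist."""
    members = ",".join(f"Permalink={gql_literal(p)}" for p in competitor_permalinks)
    return (
        f"UPDATE Company SET (ADD TO Competitions SETOF({members})) "
        f"WHERE Permalink = {gql_literal(permalink)}"
    )


# Made-up sample record standing in for one mirrored Crunchbase file.
company = json.loads('{"permalink": "acme", "name": "Acme", "founded_year": 2005}')
insert_statement = build_insert("Company", company, COMPANY_ATTRIBUTES)
product_statement = f"INSERT INTO Product VALUES (Company = {company_ref('acme')})"
update_statement = build_competition_update("acme", ["initech", "globex"])

print(insert_statement)
print(product_statement)
print(update_statement)
```

Keeping the UPDATE builder separate from the INSERT builder mirrors the two-pass approach: all INSERT statements go into the early scripts, all SETOF updates into the last one.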

Most of the work of getting the first-import tool together is copy-and-paste – it could have been done in a more sophisticated way (like using reflection on the deserialized JSON objects), but that’s most probably part of another article.

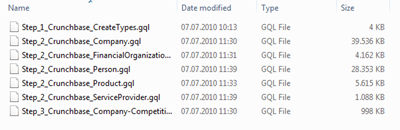

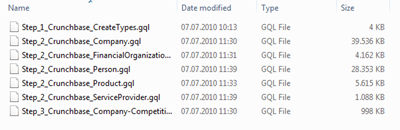

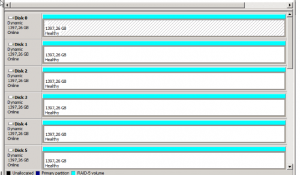

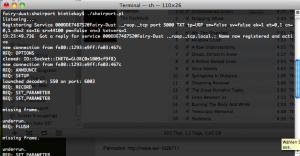

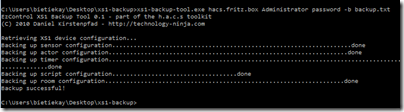

When run in the “crunchbase” directory created by the Crunchbase Mirroring tool the first-import tool generates GQL scripts – 6 of them to be precise:

The last script is named “Step_3” because it’s supposed to come after all the others.

These scripts can easily be imported after establishing the object scheme. The thing is, though: it won’t be that fast. Why is that? We’re creating several thousand nodes and the edges between them. To create such an edge, the Query Language needs to identify the node the edge originates from and the node it should point to. To find these nodes the user is free to specify matching criteria, just like in a WHERE clause.

So if you run UPDATE Company SET (ADD TO Competitions SETOF(Permalink='company1',Permalink='company2')) WHERE Permalink = 'companyname', the GraphDB needs to access the node identified by the Permalink attribute with the value “companyname” and the two nodes with the values “company1” and “company2” to create the two edges. The scripts will work just as they are, but not as fast as they could. What helps to speed things up are indices. The GraphDB uses indices to identify and find specific objects, mainly when evaluating a WHERE clause.
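To get a feeling for why an index matters here, the following Python sketch compares a lookup by Permalink without and with a hash-based index. It only illustrates the general idea of a hash index; the list of dictionaries is a hypothetical stand-in for the Company nodes and says nothing about how the GraphDB implements its indices internally.

```python
# Hypothetical in-memory stand-in for several thousand Company nodes.
companies = [{"Permalink": f"company{i}"} for i in range(10_000)]


def find_by_scan(nodes, permalink):
    """Without an index: compare node after node until the Permalink matches."""
    comparisons = 0
    for node in nodes:
        comparisons += 1
        if node["Permalink"] == permalink:
            return node, comparisons
    return None, comparisons


# With an index: build the hash table once, then every lookup is a single
# hash computation instead of a walk over all nodes.
permalink_index = {node["Permalink"]: node for node in companies}


def find_by_index(index, permalink):
    """Look a node up directly through the hash table."""
    return index.get(permalink)


scanned_node, comparisons = find_by_scan(companies, "company9999")
indexed_node = find_by_index(permalink_index, "company9999")

print(comparisons)                   # number of nodes touched by the scan
print(indexed_node is scanned_node)  # both strategies find the same node
```

Every UPDATE in the import has to resolve one node for the WHERE clause plus one per SETOF entry, so the cost of each single lookup is multiplied across the whole script.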

The sones GraphDB offers a number of integrated indices, one of which is HASHTABLE, which we are going to use in this example. Furthermore, anyone interested can implement their own index plugin – we will have a tutorial on how to do that online in the future – if you’re interested now, just ask how we can help you make it happen!

Back to the indices in our example:

The syntax for creating an index is quite easy: all you have to do is tell the CREATE INDEX query on which type and attribute the index should be created and which index type it should use. Since we’re using the Permalink attribute of the Crunchbase objects as an identifier in this example (it could be any other attribute or group of attributes that identifies one particular object), we want to create indices on the Permalink attribute for the full speed-up. This looks like this:

- CREATE INDEX ON Company (Permalink) INDEXTYPE HashTable

- CREATE INDEX ON FinancialOrganization (Permalink) INDEXTYPE HashTable

- CREATE INDEX ON Person (Permalink) INDEXTYPE HashTable

- CREATE INDEX ON ServiceProvider (Permalink) INDEXTYPE HashTable

- CREATE INDEX ON Product (Permalink) INDEXTYPE HashTable

Looks easy, is easy! To take full advantage, of course, the indices should be created before the first nodes and edges.
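Since the five statements differ only in the type name, they can also be generated and placed in front of the import statements. The following Python sketch shows this under the assumption that the import is handled as a plain list of GQL strings; the helper names and the placeholder import statement are made up for illustration.

```python
# The five object types imported in this part of the series.
INDEXED_TYPES = [
    "Company",
    "FinancialOrganization",
    "Person",
    "ServiceProvider",
    "Product",
]


def build_index_statements(type_names, attribute="Permalink", index_type="HashTable"):
    """Create one CREATE INDEX statement per object type."""
    return [
        f"CREATE INDEX ON {type_name} ({attribute}) INDEXTYPE {index_type}"
        for type_name in type_names
    ]


def with_indices_first(index_statements, import_statements):
    """Put the index creation in front so that every later lookup can use it."""
    return list(index_statements) + list(import_statements)


index_statements = build_index_statements(INDEXED_TYPES)

# Placeholder for the generated INSERT and UPDATE statements.
import_statements = ["INSERT INTO Company VALUES (Permalink = 'companyname')"]
full_script = with_indices_first(index_statements, import_statements)

for statement in full_script:
    print(statement)
```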

Once that’s sorted, the only thing left is to run the scripts. Depending on your machine, this will take a minute or two.

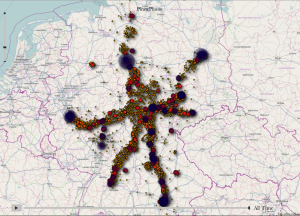

So after running those scripts, this is what happened:

- all Company, FinancialOrganization, Person, ServiceProvider and Product objects are created and filled with primitive data types

- all attributes which are essentially references (1:1 or 1:n) to a Company object are set, these are

  - Company.Competitions

  - Product.Company

That’s it for this part – in the next part of the series we will dive deeper into connecting nodes with edges. There is a ton of things that can be done with the data – stay tuned for the next part.

![Bildschirmfoto-2010-12-13-um-21.24.0[2] Bildschirmfoto-2010-12-13-um-21.24.0[2]](https://www.schrankmonster.de/wp-content/uploads/2010/12/Bildschirmfoto-2010-12-13-um-21.24.02_thumb.png)